Samedia, Dec. 7–Meta has introduced Llama 3.3 70B, the newest member of its generative AI family. Ahmad Al-Dahle, Meta’s VP of generative AI, announced the model in a post on X, saying it can match the performance of Meta’s top-tier Llama 3.1 405B model at a fraction of the cost.

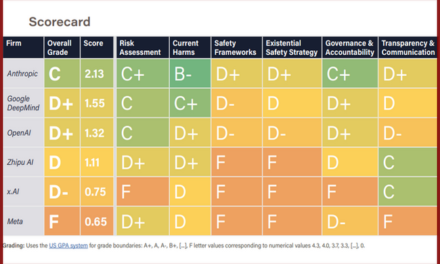

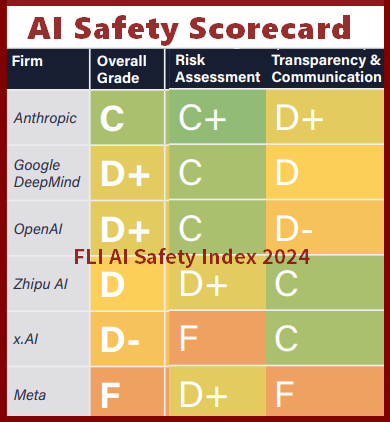

Al-Dahle attributed the model’s performance and cost efficiency to advanced post-training techniques. “By leveraging the latest advancements in post-training techniques, this model improves core performance at a significantly lower cost,” he stated. He also shared a chart showing Llama 3.3 70B outperforming competitors such as Google’s Gemini 1.5 Pro, OpenAI’s GPT-4o, and Amazon’s Nova Pro on several industry benchmarks, including MMLU, which measures a model’s language understanding.

Llama 3.3 70B is available for download from Hugging Face and the official Llama website, in line with Meta’s strategy of releasing “open source” models for a wide range of applications. Although Meta requires developers whose platforms exceed 700 million monthly users to obtain a special license, adoption has been strong: Llama models have reportedly been downloaded more than 650 million times.

The release comes as Meta AI, the company’s AI assistant, nears 600 million monthly users, a milestone CEO Mark Zuckerberg shared on Instagram. Meta AI, which launched last fall, had already passed 500 million users by October, a sign of its rapid growth.

In a recent Instagram video, Zuckerberg also teased the next generation, Llama 4. He said Llama 3.3 70B is the last major update of the year and that Llama 4 is already in development, with one of its “smaller” models expected early next year. That model is being trained on a cluster of more than 100,000 Nvidia H100 GPUs.

Meta’s aggressive push into AI continues to strengthen its position in an increasingly competitive generative AI market.