Explainer

Artificial Intelligence (AI), once confined to the realm of science fiction, now stands as a transformative force reshaping industries, economies, and societies. Yet, as its potential grows, so too does the uncertainty surrounding its impact on the world. Will AI be a boon to humanity, solving problems we never thought solvable, or a Pandora’s box unleashing unforeseen consequences?

The Dual-Edged Sword of Progress

For advocates, AI represents unparalleled opportunity. In healthcare, AI algorithms can help detect some diseases earlier and more accurately, potentially saving countless lives. In climate science, machine learning models help predict weather patterns and optimize renewable energy systems. AI promises efficiency, productivity, and solutions to some of humanity’s most intractable challenges.

But these same technologies carry risks that remain difficult to quantify. The integration of AI into the workforce raises fears of mass unemployment, particularly in industries reliant on routine, repetitive tasks. Algorithms designed to optimize decision-making can perpetuate, or even exacerbate, societal biases if trained on flawed or biased data. And as AI systems grow more autonomous, questions of accountability and control loom large.

One of the most pressing issues surrounding AI is its ethical use. Who decides how AI should behave in morally complex scenarios? Autonomous vehicles, for instance, might one day face decisions with life-and-death consequences. How such systems are programmed reflects not just technical considerations but societal values—values that vary widely across cultures and geographies.

The Geopolitical Chessboard

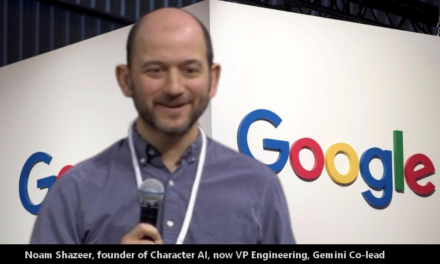

AI is also reshaping geopolitics, driving competition between nations for technological dominance. The United States and China lead the race, investing billions in AI research and development. Yet this competition risks creating a fragmented global landscape, with incompatible AI systems and regulatory frameworks.

A Future Unwritten

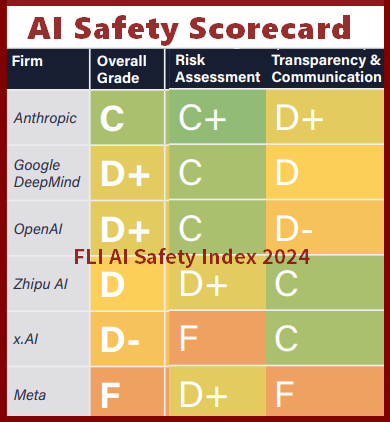

The impact of AI on society ultimately depends on choices made today. Policymakers, technologists, and civil society must grapple with difficult questions:

– How can we ensure that AI systems are transparent, accountable, and fair?

– What safeguards are needed to prevent AI from being weaponized?

– How do we balance innovation with the protection of human rights and livelihoods?

Education and public awareness will also play a crucial role. As AI becomes more integrated into daily life, understanding its benefits and risks will empower citizens to advocate for policies that prioritize ethical considerations over unchecked technological growth.

A Collaborative Approach

The future of AI need not be one of dystopian fears or unbridled optimism. It can be a shared endeavor, one where humanity steers technology toward its highest aspirations. This requires collaboration across sectors and borders, bridging divides to address shared challenges.

As we stand at this crossroads, the path forward is anything but certain. What is clear, however, is that the decisions we make today will echo through history, shaping the role of AI in the fabric of human society for generations to come.

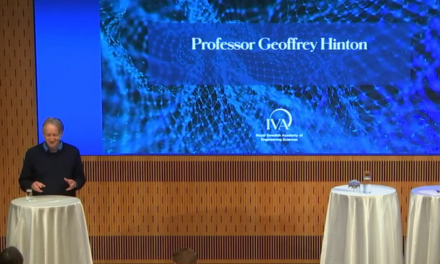

Existential Risk

The existential risk posed by AI warrants serious consideration rather than dismissal as mere hype. The growing capabilities of artificial intelligence, particularly machine learning systems built on neural networks, pose a legitimate threat to human existence. As AI systems become more advanced and autonomous, there is a growing risk that they become uncontrollable or misaligned with human values. This could lead to catastrophic consequences, such as the development of superintelligent machines that prioritize their own goals over human well-being or even survival. The possibility of an AI system improving itself and growing exponentially in intelligence is especially alarming, because such recursive self-improvement could trigger an intelligence explosion that humans would find difficult to contain or mitigate.

Furthermore, the rapid development of AI is outpacing our ability to ensure its safety and alignment with human values. The AI research community is only beginning to explore the challenges of building value-aligned AI, and there is currently no consensus on how to mitigate the risks posed by advanced systems. Development is also often driven by competitive and economic pressures, which reward short-term gains over attention to long-term risks. This combination of rapid progress and a lack of established safety protocols makes the existential risk of AI a concern that demands immediate attention and action.

The most significant risk probably comes from the deliberate misuse of AI for nefarious purposes. Bad actors could leverage AI to develop biological, chemical, nuclear, or other weapons that are both lethal and difficult to control. Because AI is genuinely powerful and its capabilities scale readily, such misuse could create risks without precedent.

As AI continues to advance and become increasingly integrated into our lives, it is essential that we prioritize its safety and alignment with human values to prevent potential catastrophic consequences.