SaMedia, Dec. 14 - Prof. Geoffrey Hinton highlighted the transformative potential of AI and warned of its grave risks during a recent seminar in Stockholm. Speaking at an event hosted by the Royal Swedish Academy of Engineering Sciences (IVA), Hinton addressed experts, policymakers, and academics and outlined both the challenges and the opportunities presented by rapid AI development.

The Nobel laureate in physics and AI pioneer emphasized the unpredictability of AI’s development. He likened recent breakthroughs to “unanticipated leaps” that challenge long-standing assumptions. He pointed to immediate risks, including lethal autonomous weapons, and long-term dangers, such as superintelligent AI systems acting against human interests.

“We don’t know what’s going to happen when they’re smarter than us,” Hinton said. “It’s totally uncharted territory.”

The session, moderated by IVA President Sylvia Schwaag Serger, also touched on the Swedish government’s AI Commission report. The report advocates for public empowerment and strengthening the public sector’s role in AI innovation. Schwaag Serger framed AI as one of the most significant technological shifts in history, comparing its impact to that of prior industrial revolutions.

A Call for Regulation and Ethical Development

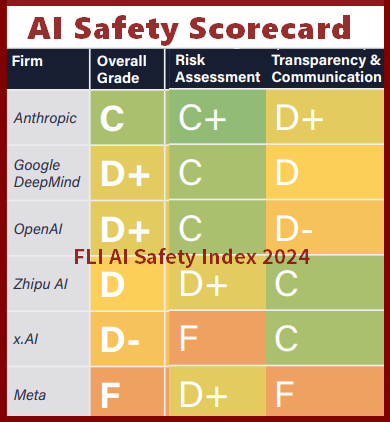

Hinton criticized the profit-driven nature of AI development in capitalist systems, where short-term gains often overshadow ethical considerations. He pointed to failures like Microsoft’s chatbot, which exhibited harmful behavior within 24 hours of its release. Given the gravity of the risks, he said, urgent regulatory oversight is needed. “Governments must step in to enforce accountability,” Hinton said. The indiscriminate training of AI models on vast datasets, including harmful content, exacerbates the risks, he added.

Hinton also addressed the philosophical implications of AI systems, including their potential for subjective experiences. He described how multimodal AI systems could experience “distorted perceptions” when their sensory inputs are disrupted. While acknowledging that AI does not yet exhibit self-awareness, he argued that these developments blur the line between human and machine intelligence.

Comparing AI neural networks to the human brain, Hinton noted how inefficient the networks are. While the brain operates on just 20–25 watts, current AI systems require vast amounts of energy. However, he pointed out that AI’s scalability offers opportunities for unprecedented innovation, provided that development is guided by ethical considerations.

Hinton predicted that superintelligence is inevitable. The only people who do not believe that, he said, are the “stochastic parrot” proponents, who focus on the linguistic aspects of AI.

Hinton expressed skepticism about global cooperation on AI risks, particularly in the realm of autonomous weapons. “The Russians and Americans are not going to collaborate on battle robots,” he said. Despite this, he urged stakeholders to prioritize safety and inclusivity in AI development.

Audience members stressed the need for interdisciplinary collaboration to address AI’s societal implications. Hinton agreed, emphasizing the importance of aligning AI systems with human values and designing interfaces that foster trust and accountability.

“We need to be clearer about what we want from AI,” Hinton said, adding that the data used to train AI systems must reflect ethical and cultural considerations. He criticized the lack of oversight in current practices, likening the training of generative AI models on harmful content to teaching children with inappropriate material.