Samedia, Dec. 13 – A damning new report exposes major safety failures among AI industry leaders, highlighting a dangerous lack of oversight and preparation for advanced AI risks.

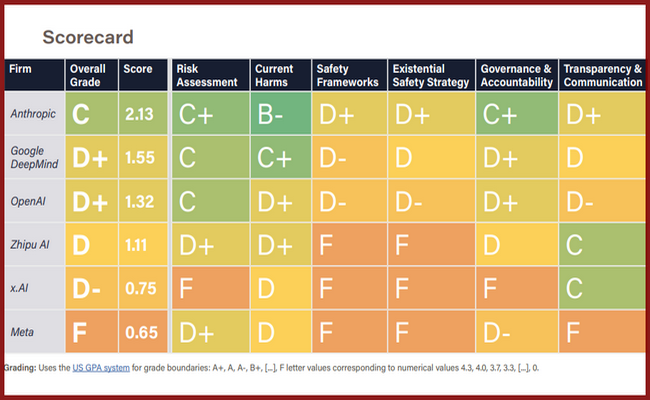

The Future of Life Institute (FLI) has released its 2024 AI Safety Index. It reveals widespread inadequacies in how leading companies like Anthropic, OpenAI, Google DeepMind, and Meta manage advanced artificial intelligence risks. The findings suggest that critical safety practices are being neglected for profit.

“Companies are falling short on even the most basic safety precautions,” the report states. It warns that no firm has developed a “robust strategy for ensuring that advanced AI systems remain controllable and aligned with human values.”

The independent review panel included top AI experts like Yoshua Bengio, a Turing Award laureate, and Stuart Russell, a pioneer in AI ethics. Their findings were based on public data, company surveys, and assessments of 42 indicators across six domains, including Risk Assessment, Governance, and Existential Safety Strategies.

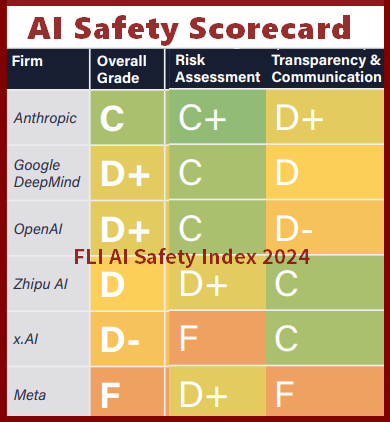

Failing Grades Across the Board

Most companies performed poorly in the evaluation. Anthropic led with a “C” grade, while Meta earned an “F,” the lowest score.

The report’s scorecard highlights serious vulnerabilities:

- Anthropic, while leading, had weaknesses in transparency and governance.

- Google DeepMind and OpenAI scored poorly for existential safety strategies, with insufficient plans to address catastrophic risks.

- Meta was singled out for enabling misuse by publishing the weights of advanced models, making them easier to exploit maliciously.

“All flagship models were found to be vulnerable to jailbreaks and adversarial attacks,” the report states. These vulnerabilities pose serious risks to public safety.

Transparency and Accountability Lacking

The report criticizes the lack of transparency in safety practices. While Anthropic allowed pre-deployment testing by third-party evaluators, companies like OpenAI and Meta resisted meaningful external oversight.

“Without independent validation, companies cannot be trusted to accurately assess their own risks,” the review panel wrote. It called for mandatory external evaluations and public disclosures.

Existential Risks Poorly Managed

The report raised alarms over the industry’s handling of existential safety. Despite public commitments to addressing risks from artificial general intelligence (AGI), no company presented an actionable plan.

“Technical research on control, alignment, and interpretability for advanced AI systems is immature and inadequate,” the report states. Current strategies are unlikely to prevent catastrophic outcomes.

The panel noted that profit incentives are driving companies to cut corners. “In the absence of oversight, safety becomes secondary to revenue generation,” the report states.

FLI President Max Tegmark called the findings a wake-up call. “We’re rushing to deploy powerful AI systems without sufficient guardrails,” he said. “The consequences of this negligence could be catastrophic.”

The report urges a collective effort to address these deficiencies. Recommendations include:

- Mandatory external oversight for all high-risk AI deployments.

- Development of comprehensive safety frameworks that define clear risk thresholds and mitigation strategies.

- Greater transparency through public disclosures and third-party evaluations.

The 2024 AI Safety Index highlights the urgent need for stronger safety measures in AI development. With no company adequately addressing advanced AI risks, the report warns that the race to deploy increasingly powerful systems is outpacing safety efforts.

The findings are expected to intensify debates on AI regulation. FLI is calling on governments, researchers, and companies to act before it’s too late. “The stakes couldn’t be higher,” Tegmark said. “We must get this right.”